International Journal of Scientific & Engineering Research Volume 4, Issue3, March-2013 1

ISSN 2229-5518

Real-Time Static and Dynamic Hand Gesture Recognition

Angel, Neethu.P.S

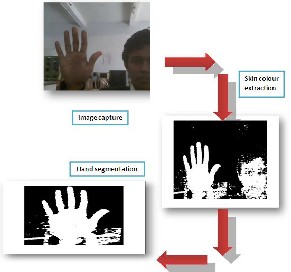

Abstract: Real-time static and dynamic hand gesture recognition lets users interact with computers in more natural and intuitive ways. A gesture can by itself convey much more information than a computer mouse or joystick, which allows a greater number of possibilities for computer interaction. The purpose of this work is to design, develop and study a practical framework for real-time gesture recognition that can be used in a variety of human-computer interaction applications. The system includes the processes of image acquisition, hand segmentation, hand feature extraction and gesture recognition.

Index Terms- Adaptive skin color model, hand gesture recognition, motion detection, motion history image.


Angel is currently pursuing a master's degree program in Communication Systems at Hindustan University, India, PH-9003035783. E-mail: angel.messenger822@gmail.com

Mrs. Neethu.P.S is currently an assistant professor in Electronics and Instrumentation Engineering at New Prince Shri Bhavani College of Engineering and Technology, India. E-mail: ps.neethu@gmail.com

With the massive influx of computers in society and the increasing importance of service sectors in many industrialized nations, the market for robots in conventional applications of manufacturing automation is reaching saturation, and research on robotics is rapidly proliferating in the field of service industries [4]. Service robots operate in dynamic and unstructured environments and interact with people who are not necessarily skilled in communicating with robots. A friendly and cooperative interface is thus critical for the development of service robots. A gesture-based interface holds the promise of making human-robot interaction more natural and efficient.

Gesture-based interaction was first proposed by M. W. Krueger as a new form of human-computer interaction in the middle of the seventies, and there has been growing interest in it recently. As a special case of human-computer interaction, human-robot interaction is subject to several constraints: the background is complex and dynamic; the lighting conditions are variable; the shape of the human hand is deformable; the implementation must execute in real time; and the system is expected to be user and device independent.

Numerous techniques for gesture-based interaction have been proposed, but hardly any published work fulfills all of these requirements. R. Kjeldsen and J. Kender presented a real-time gesture system which was used in place of the mouse to move and resize windows. In this system, the hand was segmented from the background using skin color, and the hand's pose was recognized by a neural network. A drawback of the system is that its hand tracking has to be specifically adapted for each user. The Perseus system developed by R. E. Kahn was used to recognize the pointing gesture. In that system, a variety of features, such as intensity, edge, motion, disparity and color, were used for gesture recognition [3]. This system was implemented only in a restricted indoor environment. In the gesture-based human-robot interaction system of J. Triesch and C. von der Malsburg, a combination of motion, color and stereo cues was used to track and locate the human hand, and hand posture recognition was based on elastic graph matching. This system is prone to noise and sensitive to changes in illumination.

This section describes the implementation details of the real-time static and dynamic hand gesture recognition system. The system is subdivided into three independent subsystems:

1. Static hand gesture recognition system.

2. Dynamic hand gesture recognition system.

3. Virtual mouse system.

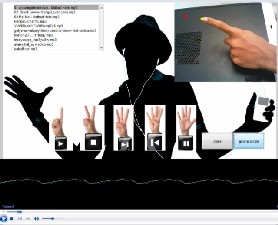

The three systems work independently and are selected through a graphical user interface, as in Fig. 1. The static hand gesture recognition system detects the number of fingers shown using the k-curvature algorithm.

The dynamic hand gesture recognition system tracks the hand motion, that is, it identifies whether the hand moves right, left, up or down [2]. The virtual mouse system moves the mouse pointer as the hand moves. Both the dynamic and virtual mouse systems are implemented using the centroid tracking method.

IJSER © 2013 http://www.ijser.org


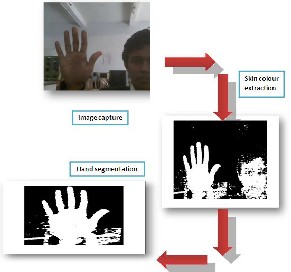

The static hand gesture recognition system identifies static hand poses, such as the number of fingers shown, and triggers an application action accordingly.

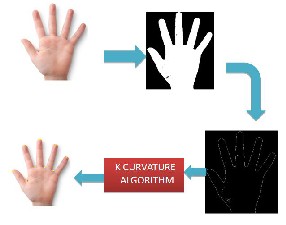

The peaks and valleys are extracted using the k-curvature method. The coordinate values of the tips and valleys are then plotted on the captured image.

From the number of peaks and valleys, the number of fingers in the current hand gesture can be identified.

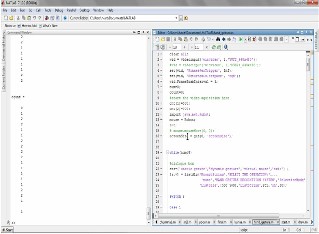

The k-curvature method is used to recognize the static hand gesture, that is, the number of fingers shown, as in Fig. 3. The k-curvature algorithm, shown in Fig. 4, identifies the peaks and valleys of the hand in the binary image. The number of these peaks and valleys then identifies the gesture.
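The paper does not list the k-curvature steps, so the following is only a minimal Python sketch of one common formulation, not the authors' MATLAB implementation. It assumes the hand boundary is available as an ordered, closed list of (x, y) points. For each point it measures the angle between the vectors to the points k steps behind and k steps ahead, treats sharp angles as candidates, and uses the sign of the cross product (relative to the contour orientation) to separate convex peaks from concave valleys. The function names, the 60-degree default threshold and the run-merging rule are all assumptions made for illustration.

```python
import math


def signed_area(contour):
    """Shoelace formula; the sign encodes the traversal direction."""
    n = len(contour)
    total = 0.0
    for i in range(n):
        x1, y1 = contour[i]
        x2, y2 = contour[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return total / 2.0


def label_points(contour, k, max_angle_deg):
    """Label each contour point as 'peak', 'valley', or None."""
    n = len(contour)
    orientation = signed_area(contour)
    labels = []
    for i in range(n):
        px, py = contour[i]
        qx, qy = contour[(i - k) % n]  # point k steps behind
        rx, ry = contour[(i + k) % n]  # point k steps ahead
        ax, ay = qx - px, qy - py
        bx, by = rx - px, ry - py
        norm = math.hypot(ax, ay) * math.hypot(bx, by)
        if norm == 0:
            labels.append(None)
            continue
        cos_angle = max(-1.0, min(1.0, (ax * bx + ay * by) / norm))
        angle = math.degrees(math.acos(cos_angle))
        if angle >= max_angle_deg:
            labels.append(None)  # not sharp enough to be a tip or a valley
            continue
        cross = ax * by - ay * bx
        # A convex corner has a cross product whose sign is opposite to the
        # sign of the contour's signed area.
        labels.append("peak" if cross * orientation < 0 else "valley")
    return labels


def count_peaks_valleys(contour, k, max_angle_deg=60.0):
    """Count runs of consecutive peak or valley points along the closed contour."""
    labels = label_points(contour, k, max_angle_deg)
    peaks = valleys = 0
    for i, label in enumerate(labels):
        # labels[i - 1] wraps around to the last point when i == 0.
        if label is not None and label != labels[i - 1]:
            if label == "peak":
                peaks += 1
            else:
                valleys += 1
    return peaks, valleys


# Toy "crown" outline with three tips and two notches between them.
crown = [(0, 0), (8, 0), (8, 10), (6, 4), (4, 10), (2, 4), (0, 10)]
print(count_peaks_valleys(crown, k=1))  # (3, 2)
```

On a real, densely sampled hand contour, k would be much larger than 1, and both k and the angle threshold would need tuning to the image resolution.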


MUSIC PLAYER

ActiveX is a Microsoft Windows protocol for component integration. With the help of ActiveX, it is possible to integrate MATLAB and Windows Media Player.

Han = actxcontrol('WMPlayer.OCX.7')

This creates a WMPlayer object. Changing the pathname and filename changes the song. A different player operation is assigned to each hand gesture.

Dynamic hand gesture recognition is used to detect moving hand gestures, such as waving. The dynamic hand gesture recognition system tracks the hand motion, that is, it identifies whether the hand moves right, left, up or down.

The centroid of the binary image can be calculated using the MATLAB function regionprops(BW, properties), where the requested property is the centroid. The result is the horizontal and vertical (x, y) coordinates of the centroid, as in Fig. 7.

Since the input binary image BW contains only one white region (the extracted hand), the result of regionprops(BW, properties) is the centroid of the hand [1], [2].
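To make the centroid computation concrete, here is a small Python sketch of the underlying arithmetic: the mean x and mean y of all foreground pixels. It illustrates the idea only and is not a reimplementation of regionprops, which labels each connected region separately. The sketch treats every nonzero pixel as part of the single hand region (the assumption stated above) and uses 0-based pixel indices, whereas MATLAB coordinates are 1-based. The function name `centroid` is hypothetical.

```python
def centroid(binary_image):
    """Return the (x, y) centroid of all nonzero pixels, or None if there are none."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(binary_image):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # no hand pixels in this frame
    return (sum_x / count, sum_y / count)


# A 2x2 white block in the middle of a 4x4 frame.
frame = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
print(centroid(frame))  # (1.5, 1.5)
```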


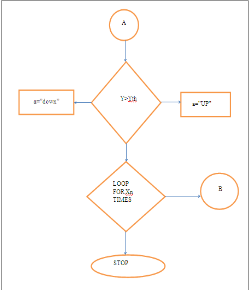

Passing this centroid value to java.awt.Robot changes the position of the mouse pointer. If the x coordinate of the centroid crosses a threshold value, the dynamic gesture ‘right’ is detected; otherwise ‘left’ is detected. Similarly, if the y coordinate crosses a threshold, ‘up’ is detected; otherwise ‘down’ is detected, as in Fig. 8.
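The text does not specify how the thresholds are applied, so the Python sketch below shows one plausible reading rather than the authors' exact rule. It compares the centroid displacement between two frames against a single threshold and reports the direction along the dominant axis. The function name, the use of displacement instead of absolute position, and the single shared threshold are assumptions. Image coordinates are assumed to have y increasing downward, so a decreasing y counts as ‘up’.

```python
def classify_motion(prev_centroid, curr_centroid, threshold):
    """Return 'right', 'left', 'up', 'down', or None for a small movement."""
    dx = curr_centroid[0] - prev_centroid[0]
    dy = curr_centroid[1] - prev_centroid[1]
    if max(abs(dx), abs(dy)) < threshold:
        return None  # movement too small to count as a gesture
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    # Image rows grow downward, so a smaller y means the hand moved up.
    return "up" if dy < 0 else "down"


print(classify_motion((10, 10), (30, 12), threshold=15))  # right
print(classify_motion((50, 50), (48, 20), threshold=15))  # up
```

If the camera image is not mirrored, left and right would appear swapped from the user's point of view, so a real system might need to flip dx.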

VIRTUAL MOUSE

One application of dynamic hand gesture recognition is the virtual mouse, in which the mouse pointer follows the hand movements, as in Fig. 8.
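The camera frame and the screen usually have different resolutions, so the centroid has to be mapped to screen coordinates before the pointer is moved. The paper does not describe this step; the Python sketch below assumes a simple linear scaling with clamping to the screen bounds. The function name `to_screen` and the frame and screen sizes are hypothetical. The resulting pair is what would be handed to a call such as java.awt.Robot's mouseMove(x, y).

```python
def to_screen(centroid, frame_size, screen_size):
    """Linearly scale a frame-space centroid to integer screen coordinates."""
    cx, cy = centroid
    frame_w, frame_h = frame_size
    screen_w, screen_h = screen_size
    x = int(cx * screen_w / frame_w)
    y = int(cy * screen_h / frame_h)
    # Clamp so the pointer never leaves the visible screen area.
    x = min(max(x, 0), screen_w - 1)
    y = min(max(y, 0), screen_h - 1)
    return x, y


# Centre of a 320x240 frame maps to the centre of a 1920x1080 screen.
print(to_screen((160, 120), (320, 240), (1920, 1080)))  # (960, 540)
```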

In this section we present the recognition results of the real-time static and dynamic hand gesture recognition systems.

The work in this paper was implemented entirely in MATLAB. The system consists of three independent units: static gesture recognition, dynamic gesture recognition, and a virtual mouse. The static hand gesture recognition system uses the k-curvature algorithm, and the other two systems use centroid measurement and tracking. The performance of the designed system was verified using the hands of different people. The effectiveness of the design in a real-world situation was demonstrated by a physical implementation of the system.

The major extension to this work would be to make the system work against more complex backgrounds and under different lighting conditions. It could also be developed into an effective user interface that supports all mouse functions.

We wish to thank Prof. K. J. Wilson for his support and guidance.

REFERENCES

[1] Burak Ozer, Tiehan Lu, and Wayne Wolf, “Design of a Real-Time Gesture Recognition System,” IEEE Signal Processing Magazine, 2005.

[2] Chan Wa Ng and S. Ranganath, “Real-time gesture recognition system and application,” Image and Vision Computing, vol. 20, pp. 993-1007, 2002.

[3] Chen-Chiung Hsieh and Dung-Hua Liou, “A Real Time Hand Gesture Recognition System Using Motion History Image,” 2nd International Conference on Signal Processing Systems (ICSPS), 2010.

[4] D. H. Liou, “A real-time hand gesture recognition system by adaptive skin-color detection and motion history image,” Master Thesis of the Dept. of Computer Science and Engineering, Tatung University, Taipei, Taiwan, 2009.

[5] Kwang-Ho Seok, Chang-Mug Lee, Oh-Young Kwon, and Yoon Sang Kim, “A Robot Motion Authoring using Finger-Robot Interaction,” Fourth International Conference on Computer Sciences and Convergence Information Technology, 2009.

[6] Nguyen Dang Binh, Enokida Shuichi, and Toshiaki Ejima, “Real-Time Hand Tracking and Gesture Recognition System,” GVIP 05 Conference, CICC, Cairo, Egypt, 19-21 December 2005.

[7] S. M. Hassan Ahmed, Todd C. Alexander, and Georgios C. Anagnostopoulos, “Real-time, Static and Dynamic Hand Gesture Recognition for Human-Computer Interaction,” IEEE, 2006.

[8] T. Maung, “Real-time hand tracking and gesture recognition system using neural networks,” Proc. of World Academy of Science, Engineering and Technology, vol. 38, pp. 470-474, Feb. 2009.


[9] Vladimir I. Pavlovic, Rajeev Sharma, and Thomas S. Huang, “Visual Interpretation of Hand Gestures for Human-Computer Interaction: A Review,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 19, no. 7, July 1997.

[10] Xiaoming Yin, “Hand Posture Recognition in Gesture-Based Human-Robot Interaction,” International Journal of Robotics and Autonomous System, 2006.
