International Journal of Scientific & Engineering Research, Volume 5, Issue 4, April-2014 943

ISSN 2229-5518

GESTURE RECOGNITION: PROLOGUE

Manisha Chahal, B. Anil Kumar, Kavita Sharma

Abstract—This paper discusses the basics of the gesture recognition (GR) concept, its various types, the methods and approaches for recognizing a gesture, and its applications. Gesture recognition means the identification and recognition of gestures of body motion, which can originate from the face or hand. Gesture recognition is also a topic in computer science and language technology that interprets human gestures via mathematical algorithms. Recognition of human activity is an attractive goal for computer vision. The task of gesture recognition is made challenging by complex backgrounds, the presence of non-gesture hand motions, and different illumination environments. Current focuses in the field include emotion recognition from the face and hand gesture recognition. GR enables humans to interface with machines (human-machine interface, HMI) and to interact naturally without any mechanical devices. The applications of gesture recognition are numerous, ranging from sign language through medical rehabilitation to virtual reality.

Index Terms—Image Processing and Pattern Recognition, HMI, Gesture Recognition (GR), neural network learning rules, static gesture, dynamic gesture, skin detection, edge detection


The notion of gesture has been characterized as follows: “the notion of gesture is to embrace all kinds of instances where an individual engages in movements whose communicative intent is paramount, manifest and openly acknowledged”. A gesture can be modeled as a stochastic process [1]: it can be viewed as random trajectories in parameter spaces that describe the spatial states of the hand or arm. Gesture recognition is also described as a topic in language technology with the goal of interpreting human gestures via mathematical algorithms. Gestures can originate from any bodily motion or state but commonly originate from the face or hand. The identification and recognition of posture, gait, proxemics, and human behaviors is also the subject of gesture recognition techniques [2]. This paper deals with various gesture recognition techniques. Using this concept, it is possible to point a finger at the computer screen so that the cursor moves accordingly. This could potentially make conventional input devices such as mice, keyboards and even touch-screens redundant. The primary goal of gesture recognition research is to create a system that can identify specific human gestures and use them to convey information or for device control. Gesture recognition is the act of interpreting motions to determine such intent. There are different ways to identify and recognize these gestures, such as:

• Hand Gesture Recognition

• Face or Emotion Gesture Recognition

• Body Gesture Recognition


• Manisha Chahal is currently pursuing a master's degree program in electronics engineering at Lingayas University, Faridabad, India. E-mail: chahalmanisha25@gmail.com

• B. Anil Kumar is currently working as Asst. Prof. in the Electrical and Electronics Dept., Lingayas GVKS IMT, Faridabad, India. E-mail:

• Kavitha Sharma is currently working as Asst. Prof. in the Electronics Dept., Lingayas University, Faridabad, India.

The meaning of a gesture can depend on the following [3]:

(i) Spatial information

(ii) Pathic information

(iii) Symbolic information

(iv) Affective information

In computer interfaces, two types of gestures are distinguished: online gestures, which can be regarded as direct manipulations such as scaling and rotating, and offline gestures, which are usually processed after the interaction is finished. Gestures are mainly classified as static or dynamic.

A static gesture (posture) can be described in terms of hand shapes. A posture is the combination of hand position, orientation and flexion observed at some time instance. Static gestures are not time-varying signals; an example is facial information such as a smile or an angry face [4].

A dynamic gesture is a sequence of postures connected by motions over a short time span. In a video signal, the individual frames define the postures and the video sequence defines the gesture; an example is using recognized temporal gestures to interact with a computer [4].
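The distinction between static and dynamic gestures can be made concrete as a data structure. The Python sketch below is illustrative only: the class names, fields and units (pixels for position, degrees for orientation, flexion from 0 for straight to 1 for bent) are assumptions, not definitions taken from [4].

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass(frozen=True)
class Posture:
    """Static gesture: the hand state observed at one time instant."""
    position: Tuple[float, float]   # assumed: hand centre in image pixels
    orientation_deg: float          # assumed: in-plane rotation of the hand
    flexion: Tuple[float, ...]      # assumed: per-finger flexion, 0 = straight, 1 = bent


@dataclass
class DynamicGesture:
    """Dynamic gesture: postures connected by motion over a short time span."""
    frames: List[Tuple[float, Posture]] = field(default_factory=list)  # (time in s, posture)

    def add(self, t: float, posture: Posture) -> None:
        # Each video frame contributes one posture; frames must arrive in time order.
        if self.frames and t <= self.frames[-1][0]:
            raise ValueError("timestamps must be strictly increasing")
        self.frames.append((t, posture))

    def duration(self) -> float:
        return self.frames[-1][0] - self.frames[0][0] if self.frames else 0.0

    def displacement(self) -> Tuple[float, float]:
        """Net hand movement between the first and the last posture."""
        if len(self.frames) < 2:
            return (0.0, 0.0)
        x0, y0 = self.frames[0][1].position
        x1, y1 = self.frames[-1][1].position
        return (x1 - x0, y1 - y0)
```

For example, three open-hand postures at x = 50, 90 and 130 pixels, sampled 0.1 s apart, give a `displacement()` of `(80.0, 0.0)`.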

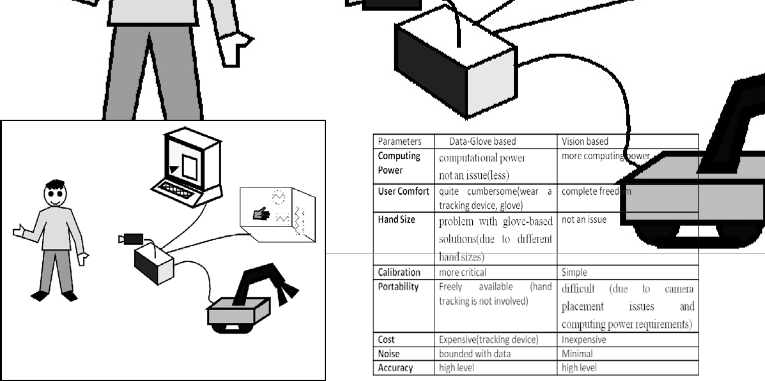

For any system, the first step is to collect the data necessary to accomplish a specific task. For hand posture and gesture recognition systems, different technologies are used for acquiring input data. Present technologies for recognizing gestures can be divided into vision-based, instrumented (data) glove, and colored marker approaches. Figure 1 shows an example of these technologies.

IJSER © 2014 http://www.ijser.org


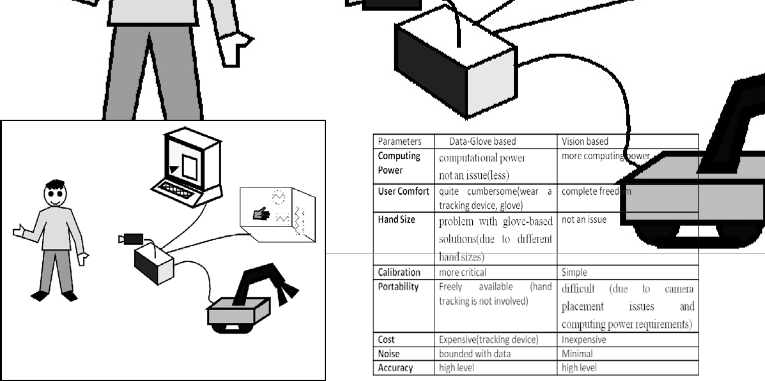

TABLE 1

BASIC GESTURE RECOGNITION APPROACHES

Fig. 1. Technologies for recognizing gestures.

In vision-based methods, the system requires only camera(s) to capture the images needed for natural interaction between humans and computers, and no extra devices are needed. Although these approaches are simple, they raise many challenges, such as complex backgrounds, lighting variation, and other skin-colored objects appearing together with the hand, in addition to system requirements such as speed, recognition time, robustness, and computational efficiency [6].

Instrumented data glove approaches use sensor devices for capturing hand position, and motion. These approaches can easily provide exact coordinates of palm and finger’s location

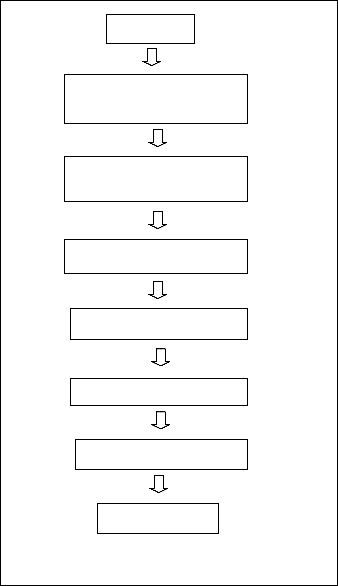

Most of the researchers classified gesture recognition system into mainly four steps after acquiring the input. These steps are: Extraction Method or modeling, features estimation and extraction, and classification or recognition as illustrated in Figure 2.

and orientation, and hand configurations .however these ap- proaches require the user to be connected with the computer physically .which obstacle the ease of interaction between us- ers and computers, besides the price of these devices are quite expensive it is inefficient for working in virtual reality .[7]

Marked gloves, or colored markers, are gloves worn on the human hand that carry colors to direct the process of tracking the hand and locating the palm and fingers, which provides the ability to extract the geometric features necessary to form the hand shape. The color glove might consist of small regions with different colors or, as in one earlier design, use three different colors on a wool glove to represent the fingers and palm. The advantages of this technology are its simplicity of use and its low cost compared with instrumented data gloves. However, it still limits the naturalness of human-computer interaction [8].

Data acquisition → Gesture modeling → Feature extraction → Recognition stage

Fig. 2. Gesture recognition system components.

Once the input data or gesture has been modeled, feature extraction should be smooth, since fitting is considered one of the most difficult obstacles that may be faced. These features can be the location of the hand, palm or fingertips, joint angles, or any emotional expression or body movement. The extracted features might be stored in the system at the training stage as templates, or may be fused with recognition devices such as neural networks, HMMs, or decision trees, which have limited memory and should not simply memorize the training data.
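The template option can be illustrated with a nearest-template classifier: at the training stage one averaged feature vector per gesture class is stored, and at recognition time a new sample receives the label of the closest stored template. This is a minimal Python sketch assuming fixed-length numeric feature vectors and Euclidean distance; the function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def train_templates(samples: Dict[str, List[Sequence[float]]]) -> Dict[str, List[float]]:
    """Training stage: average each class's feature vectors into one stored template."""
    templates = {}
    for label, vectors in samples.items():
        dim = len(vectors[0])
        templates[label] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return templates


def classify(features: Sequence[float], templates: Dict[str, List[float]]) -> str:
    """Recognition stage: return the label whose template is nearest (Euclidean)."""
    return min(templates, key=lambda label: math.dist(features, templates[label]))
```

With hypothetical two-element features such as [extended fingers, hand aspect ratio], `classify([4, 2], train_templates({"open": [[5, 1], [5, 3]], "fist": [[0, 1], [0, 3]]}))` returns `"open"`. Averaging keeps one template per class; storing every training vector instead would turn this into a nearest-neighbour classifier.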

| Gesture type | Characteristic | Recognition approaches |
|---|---|---|
| Static | Certain pose or configuration | Template matching; neural networks; pattern recognition techniques |
| Dynamic | Prestroke, stroke, and poststroke phases | Time-compressing templates; dynamic time warping; hidden Markov models; conditional random fields; time-delay neural networks; particle filtering and condensation algorithm; finite state machine |
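Of the dynamic-gesture approaches in Table 1, dynamic time warping (DTW) is the simplest to show: it aligns two trajectories that may have been performed at different speeds by finding the cheapest monotonic pairing of their samples. The Python sketch assumes 1-D trajectories (for example, the x coordinate of the hand in successive frames) and absolute difference as the local cost; the template names are hypothetical.

```python
from typing import Dict, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Dynamic time warping distance between two 1-D trajectories."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j]: cheapest alignment of the first i samples of a with the first j of b
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j],      # a advances, b waits
                                     cost[i][j - 1],      # b advances, a waits
                                     cost[i - 1][j - 1])  # both advance
    return cost[n][m]


def recognize(trajectory: Sequence[float], templates: Dict[str, Sequence[float]]) -> str:
    """Label a trajectory with the template that has the smallest DTW distance."""
    return min(templates, key=lambda name: dtw_distance(trajectory, templates[name]))
```

Because DTW may repeat samples, the slow trajectory `[0, 0, 1, 2, 3, 3]` and the fast trajectory `[0, 1, 2, 3]` have a DTW distance of `0.0`, which is what makes the method tolerant of gestures performed at different speeds.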

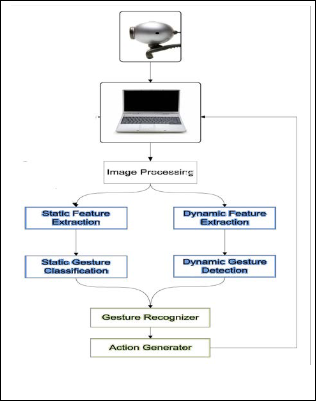

Fig. 3. Overview of the system's components

Fig. 3 shows an overview of the system's components. The process begins with the computer system capturing a frame from the web camera, which is then processed to detect candidate regions of interest. After a hand is found in a region of interest, it is tracked by the Hand Tracker, and all subsequent processing is done on this region. While the hand is being tracked, static and dynamic features are collected: the static features are passed to a neural network classifier, and the dynamic features are analyzed to obtain high-level features. The static and dynamic information is then passed to the Gesture Recognizer, which communicates the type of gesture to the Action Generator. The Action Generator passes the necessary commands to the operating system to create the end result.
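The data flow of Fig. 3 can be sketched as a chain of small functions. In this Python sketch every component is a deliberately trivial stand-in chosen for illustration: a brightness threshold replaces the hand detector, an area rule replaces the neural network classifier, and the gesture and action names (`swipe_right`, `next_page`, and so on) are hypothetical. Only the way each component's output feeds the next follows the description above.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]           # gray-scale frame, values 0..255
Box = Tuple[int, int, int, int]   # (top, left, bottom, right), inclusive


def detect_region(frame: Frame, threshold: int = 200) -> Optional[Box]:
    """Stand-in detector: bounding box of all pixels brighter than the threshold."""
    hits = [(r, c) for r, row in enumerate(frame) for c, v in enumerate(row) if v > threshold]
    if not hits:
        return None
    rows, cols = zip(*hits)
    return (min(rows), min(cols), max(rows), max(cols))


class HandTracker:
    """Records the centre of the tracked region in every frame."""

    def __init__(self) -> None:
        self.centres: List[Tuple[float, float]] = []

    def update(self, box: Box) -> None:
        top, left, bottom, right = box
        self.centres.append(((top + bottom) / 2, (left + right) / 2))


def static_features(frame: Frame, box: Box, threshold: int = 200) -> int:
    """Static feature: hand area, i.e. bright pixels inside the region."""
    top, left, bottom, right = box
    return sum(frame[r][c] > threshold
               for r in range(top, bottom + 1) for c in range(left, right + 1))


def classify_static(area: int) -> str:
    """Stand-in for the neural network classifier."""
    return "open" if area >= 4 else "fist"


def dynamic_features(centres: List[Tuple[float, float]]) -> float:
    """High-level dynamic feature: net horizontal movement of the hand."""
    return centres[-1][1] - centres[0][1] if len(centres) > 1 else 0.0


def recognize_gesture(posture: str, dx: float) -> str:
    if posture == "open" and dx != 0:
        return "swipe_right" if dx > 0 else "swipe_left"
    return "none"


ACTIONS = {"swipe_right": "next_page", "swipe_left": "previous_page", "none": "no_op"}


def run(frames: List[Frame]) -> str:
    """Frame capture -> detection -> tracking -> features -> recognizer -> action."""
    tracker, posture = HandTracker(), "fist"
    for frame in frames:
        box = detect_region(frame)
        if box is None:
            continue                      # no candidate region in this frame
        tracker.update(box)               # later processing is confined to this region
        posture = classify_static(static_features(frame, box))
    gesture = recognize_gesture(posture, dynamic_features(tracker.centres))
    return ACTIONS[gesture]               # command handed to the operating system
```

Keeping each stage behind its own function mirrors the separation in Fig. 3: any stand-in can later be swapped for a real detector or classifier without touching the rest of the chain.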

Recently, several approaches have been proposed to implement hand-gesture-to-speech systems [9], [10], [11]. The three most popular models of sensing gloves are the VPL Data Glove, the Virtex Cyber Glove [12], and the Mattel Power Glove. They all have sensors that measure some or all of the finger joint angles, and each has its own advantages and disadvantages. The use of neural networks for the recognition of gestures has been examined by several researchers. Neural networks do not make any assumption regarding the underlying probability density functions or other probabilistic information about the pattern classes under consideration; they yield the required decision function directly via training. A two-layer backpropagation network with sufficient hidden nodes has been proven to be a universal approximator [13], [14]. Wibur has trained a neural network to recognize approximately 203 hand gestures derived from American Sign Language [15].

In this neural network approach, the supervised decision-directed learning algorithm is used to generate a two-layer feedforward network in a sequential manner by adding hidden nodes. Training patterns are divided into two types: a "positive type", from which we want to extract the "concept", and a "negative type", which provides the counterexamples with respect to the "concept". A "seed" pattern, arbitrarily chosen from the positive class, is used as the base of the "initial concept". We then try to generalize the initial concept to include the next positive pattern. After this, we check whether the generalized concept now includes any negative pattern, in order to prevent "overgeneralization". The next step is to fetch the next positive pattern and generalize the concept to include it. This process involves growing the original hyperrectangle so that it becomes large enough to include the new positive pattern. A more detailed description of the training procedure is given in [15], [16].
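The generalize-then-check loop can be sketched with axis-aligned hyperrectangles, one per hidden node. This Python sketch is a simplified reading under stated assumptions, not the exact procedure of [15], [16]: the first positive pattern is taken as the seed, a pattern is accepted if it lies inside any rectangle, and a new rectangle (a new hidden node) is seeded whenever growing every existing one would cover a negative pattern.

```python
from typing import List, Sequence, Tuple

Box = Tuple[List[float], List[float]]  # (lower corner, upper corner)


def grow(box: Box, p: Sequence[float]) -> Box:
    """Smallest hyperrectangle containing both the box and the pattern p."""
    lo, hi = box
    return ([min(l, x) for l, x in zip(lo, p)], [max(h, x) for h, x in zip(hi, p)])


def contains(box: Box, p: Sequence[float]) -> bool:
    lo, hi = box
    return all(l <= x <= h for l, x, h in zip(lo, p, hi))


def learn_concept(positives: List[Sequence[float]],
                  negatives: List[Sequence[float]]) -> List[Box]:
    boxes: List[Box] = []
    for p in positives:                      # the first pattern acts as the seed
        for k, box in enumerate(boxes):
            candidate = grow(box, p)
            # Reject the generalization if it would cover a counterexample.
            if not any(contains(candidate, n) for n in negatives):
                boxes[k] = candidate
                break
        else:
            # No rectangle could grow safely: seed a new hidden node at p.
            boxes.append((list(p), list(p)))
    return boxes


def predict(boxes: List[Box], p: Sequence[float]) -> bool:
    return any(contains(b, p) for b in boxes)
```

For positives (0, 0), (1, 1), (5, 5), (6, 6) and a single negative (3, 3), growing the first rectangle to reach (5, 5) would cover the counterexample, so a second rectangle is created and the sketch returns two hidden nodes.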

We define a learning rule as a procedure for modifying the weights and biases of a network. The learning rule is applied to train the network to perform some particular task. In supervised learning, the learning rule is provided with a set of examples of proper network behavior, $\{p_1, t_1\}, \{p_2, t_2\}, \ldots, \{p_Q, t_Q\}$, where $p_q$ is an input to the network and $t_q$ is the corresponding correct (target) output. As the inputs are applied to the network, the network outputs are compared to the targets. The learning rule is then used to adjust the weights and biases of the network in order to move the network outputs closer to the targets. The perceptron learning rule falls into this supervised learning category. Linear networks can be trained to perform linear classification with the function train. This function applies each vector of a set of input vectors and calculates the network weight and bias increments due to each of the inputs. The network is then adjusted with the sum of all these corrections. We call each pass through the input vectors an epoch. This contrasts with adapt, which adjusts the weights for each input vector as it is presented. Figure 4 shows a flow chart of the perceptron algorithm. Its steps are always executed in the same order, and a graphical interface is provided only for selecting the test set, as this is the only user input.

Read data → Define number of neurons and number of targets → Initialize preprocessing layer → Initialize learning layer → Train perceptron → Plot error → Select test data → Display output

Fig. 4. Flow chart of the perceptron algorithm.
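For a single hard-limit neuron with output a, the perceptron rule computes the error e = t - a and updates the weights and bias as w ← w + e p and b ← b + e. The Python sketch below contrasts the two training styles described above: one function sums the corrections over an epoch before updating (as `train` is described as doing), the other updates after every input vector (as `adapt` is described as doing). It is a plain illustration, not the MATLAB toolbox code, and the AND data set in the usage note is a toy example.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]   # (input vector p, target t in {0, 1})


def hardlim(n: float) -> int:
    return 1 if n >= 0 else 0


def output(w: List[float], b: float, p: Sequence[float]) -> int:
    return hardlim(sum(wi * pi for wi, pi in zip(w, p)) + b)


def train_incremental(data: List[Sample], epochs: int = 50) -> Tuple[List[float], float]:
    """adapt-style: apply the perceptron rule after every input vector."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        errors = 0
        for p, t in data:
            e = t - output(w, b, p)                    # e = t - a
            w = [wi + e * pi for wi, pi in zip(w, p)]  # w <- w + e p
            b += e                                     # b <- b + e
            errors += abs(e)
        if errors == 0:                                # a full epoch without mistakes
            break
    return w, b


def train_batch(data: List[Sample], epochs: int = 50) -> Tuple[List[float], float]:
    """train-style: sum the corrections over the epoch, then update once."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        dw, db, errors = [0.0] * len(w), 0.0, 0
        for p, t in data:
            e = t - output(w, b, p)
            dw = [d + e * pi for d, pi in zip(dw, p)]
            db += e
            errors += abs(e)
        if errors == 0:
            break
        w = [wi + d for wi, d in zip(w, dw)]
        b += db
    return w, b
```

On the linearly separable AND data `[([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]`, both functions reach weights that classify all four inputs correctly within a few epochs, although they arrive at different weight vectors.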

The rapid growth in available computing power, enabling faster processing of huge data sets, has facilitated the use of elaborate and diverse methods for data analysis and classification. At the same time, demands on automatic pattern recognition systems are rising enormously due to the availability of large databases and stringent performance requirements. A simple algorithm used for pattern recognition is given here [17].

1. Convert the RGB image into a gray-scale image.

2. Segment the image into three equal parts.

3. Take the right-corner part of the second level as the left-hand image.

4. Take the left-corner part of the second level as the right-hand image.

5. Using a threshold of 120, compare each pixel with the threshold and count the number of pixels in each part.

6. Based on the pixel counts, classify whether the left hand, the right hand, or both are raised.
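The six steps can be sketched in Python as follows. Several details are not specified in the source, so the following are assumptions: the three equal parts are horizontal levels and each corner region is one third of the width; a pixel counts when its gray value exceeds 120 (that is, the hand is brighter than the background); and a hand is reported as raised when more than a hypothetical fraction `min_fraction` of its corner passes the threshold. The gray-scale conversion uses the ITU-R BT.601 luma weights.

```python
from typing import List, Tuple

RGBImage = List[List[Tuple[int, int, int]]]


def to_gray(img: RGBImage) -> List[List[float]]:
    """Step 1: RGB to gray scale using ITU-R BT.601 luma weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in img]


def raised_hands(img: RGBImage, threshold: float = 120, min_fraction: float = 0.3) -> str:
    gray = to_gray(img)
    h, w = len(gray), len(gray[0])
    # Step 2 (assumed layout): three equal horizontal levels; use the second one.
    level2 = range(h // 3, 2 * h // 3)
    # Steps 3-4: the camera mirrors the person, so the right corner shows the left hand.
    corners = {"left": range(2 * w // 3, w), "right": range(0, w // 3)}

    def is_raised(cols: range) -> bool:
        # Step 5: count pixels brighter than the threshold in this corner.
        count = sum(gray[r][c] > threshold for r in level2 for c in cols)
        return count > min_fraction * len(level2) * len(cols)

    # Step 6: decide from the pixel counts.
    left, right = is_raised(corners["left"]), is_raised(corners["right"])
    if left and right:
        return "both"
    if left:
        return "left"
    if right:
        return "right"
    return "none"
```

If the background is brighter than the hand, the comparison in step 5 would simply be reversed.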

Two MATLAB-based algorithms for hand gesture recognition are described below: edge detection and skin detection.

EDGE DETECTION

The following steps are used for detecting the edges:

1. Image capturing using a webcam or any camera.

2. Converting the captured image into frames.

3. Image pre-processing using Histogram Equalization.

4. Edge detection of the hand using an algorithm such as Canny edge detection.

5. Enlargement of the edges of regions of foreground pixels using dilation to get a continuous edge.

6. Filling of the object enclosed by the edge.

7. Storing the boundary of the object in a linear array.

8. Vectorization operation performed for every pixel on the boundary.

9. Detection of the fingertips.

10. Tracking of the fingertips across frames to determine the motion.

11. Identification of the gesture based on the motion.

12. Insertion of the input stream into the normal input path of the computing device.
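Steps 3 and 5 can be illustrated in pure Python. Histogram equalization maps each gray level through the normalized cumulative histogram so that a low-contrast frame spreads over the full 0 to 255 range, and binary dilation with a 3 × 3 square closes one-pixel gaps in an edge map. This is a textbook sketch on images stored as lists of rows; it is not claimed to match MATLAB's own functions pixel for pixel.

```python
from typing import List


def equalize_histogram(gray: List[List[int]], levels: int = 256) -> List[List[int]]:
    """Step 3: remap gray levels through the normalized cumulative histogram."""
    hist = [0] * levels
    for row in gray:
        for v in row:
            hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)   # CDF at the darkest level present
    if total == cdf_min:                      # single gray level: nothing to stretch
        return [row[:] for row in gray]
    lut = [round((c - cdf_min) / (total - cdf_min) * (levels - 1)) if c >= cdf_min else 0
           for c in cdf]
    return [[lut[v] for v in row] for row in gray]


def dilate(mask: List[List[int]]) -> List[List[int]]:
    """Step 5: binary dilation with a 3 x 3 square structuring element."""
    h, w = len(mask), len(mask[0])
    return [[int(any(mask[rr][cc]
                     for rr in range(max(0, r - 1), min(h, r + 2))
                     for cc in range(max(0, c - 1), min(w, c + 2))))
             for c in range(w)]
            for r in range(h)]
```

For instance, the nearly uniform patch `[[100, 100], [101, 102]]` is stretched to `[[0, 0], [128, 255]]`, and the broken edge `[[1, 0, 1]]` becomes the continuous edge `[[1, 1, 1]]` after dilation.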

In this algorithm, the image is first captured using a webcam, separated into frames and converted into gray-scale format. The contrast is then improved using histogram equalization. After the edges are detected, the images are dilated to fill up the broken edges. The enclosed regions are then filled using the boundary functions in MATLAB, and the boundary pixels are detected and stored sequentially in a linear array. The fingertips are then detected using a vectorization technique, and the system recognizes the gesture from the relative movement of the fingertips in the different frames.
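The source does not define its vectorization technique. One common way to find fingertips on an ordered boundary array, assumed here, is the k-curvature test: for each boundary point P[i], take the vectors to P[i - k] and P[i + k]; a small angle between them marks a sharp peak such as a fingertip, and a peak is distinguished from a valley between fingers by lying farther from the contour centroid. The values of `k` and `max_angle` are hypothetical and depend on the image resolution.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def angle_at(boundary: List[Point], i: int, k: int) -> float:
    """Angle (degrees) between P[i]->P[i-k] and P[i]->P[i+k] on a closed contour."""
    n = len(boundary)
    (x, y), (xa, ya), (xb, yb) = boundary[i], boundary[(i - k) % n], boundary[(i + k) % n]
    v1, v2 = (xa - x, ya - y), (xb - x, yb - y)
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        return 180.0
    cos_a = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def fingertips(boundary: List[Point], k: int = 3, max_angle: float = 60.0) -> List[Point]:
    n = len(boundary)
    centroid = (sum(p[0] for p in boundary) / n, sum(p[1] for p in boundary) / n)
    candidates = []
    for i in range(n):
        if angle_at(boundary, i, k) > max_angle:
            continue
        # Keep peaks, drop valleys: a tip lies farther from the centroid
        # than the midpoint of its two k-neighbours.
        (xa, ya), (xb, yb) = boundary[(i - k) % n], boundary[(i + k) % n]
        mid = ((xa + xb) / 2, (ya + yb) / 2)
        if math.dist(boundary[i], centroid) > math.dist(mid, centroid):
            candidates.append(i)
    # From each run of consecutive candidates keep only the sharpest point
    # (runs that wrap past index 0 are not merged in this sketch).
    tips, run = [], []
    for i in candidates + [None]:
        if run and (i is None or i != run[-1] + 1):
            tips.append(boundary[min(run, key=lambda j: angle_at(boundary, j, k))])
            run = []
        if i is not None:
            run.append(i)
    return tips
```

On a square "palm" with one narrow triangular "finger" on top, sampled at one-pixel steps, only the finger's apex passes both tests; the 90-degree palm corners are rejected by the angle limit.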

SKIN DETECTION

The following steps are used for skin detection [19]:

1. Image capturing using a webcam or any camera.

2. Converting the captured image into frames.

3. Skin color detection using hue and saturation values of various possible skin tones.

4. Storing the boundary of the object in a linear array.

5. Vectorization operation performed for every pixel on the boundary.

6. Detection of the fingertips.

7. Tracking of the fingertips in consecutive frames to determine the motion.

8. Identification of the gesture based on the motion.


9. Insertion of the input stream into the normal input path of the computing device.
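Step 3 can be sketched with Python's standard `colorsys` module: each RGB pixel is converted to hue, saturation and value, and a pixel is kept when its hue and saturation fall inside a skin range. The range used here (hue up to 50 degrees, saturation between 0.23 and 0.68) is an illustrative assumption; in practice it must be tuned to the camera, the lighting and the skin tones of the users.

```python
import colorsys
from typing import List, Tuple


def skin_mask(img: List[List[Tuple[int, int, int]]],
              max_hue_deg: float = 50.0,
              sat_range: Tuple[float, float] = (0.23, 0.68)) -> List[List[int]]:
    """Return 1 for pixels whose hue and saturation fall in the assumed skin range."""
    mask = []
    for row in img:
        out = []
        for r, g, b in row:
            h, s, _v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)  # h, s, v in [0, 1]
            hue_deg = h * 360
            out.append(int(hue_deg <= max_hue_deg and sat_range[0] <= s <= sat_range[1]))
        mask.append(out)
    return mask
```

Ignoring the value channel, as the step above does, makes the test less sensitive to overall brightness; a warm tan pixel such as (224, 172, 105) is kept, while blue and pure white pixels are rejected.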

In the skin detection algorithm, the skin detection step is applied after the images are separated into frames. This technique identifies the closed contour of the fingers even in the presence of a noisy background. The vectorization technique is then used to detect the fingertips, and the system recognizes the gesture from the relative movement of the fingertips in the different frames [18].
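Both algorithms end by identifying the gesture from the relative movement of the fingertips across frames. A minimal Python sketch, assuming one tracked fingertip position per frame in image coordinates (y grows downward) and a hypothetical minimum travel of 20 pixels, labels the dominant direction of motion; the gesture names are illustrative.

```python
from typing import List, Tuple


def classify_motion(track: List[Tuple[float, float]], min_travel: float = 20.0) -> str:
    """Label a fingertip track by its dominant direction of motion."""
    if len(track) < 2:
        return "none"
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]
    if max(abs(dx), abs(dy)) < min_travel:
        return "none"                      # too little movement: treat as static
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return "swipe_down" if dy > 0 else "swipe_up"
```

Comparing only the first and last positions keeps the sketch short; a fuller system would compare whole trajectories, for example with the dynamic-gesture approaches listed in Table 1.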

There are a limited number of studies in the literature on hand gesture recognition. Recognition methods, as in the detection procedure, mainly rely on algorithms that need training or on various environmental constraints. A summary of such algorithms is shown in Table 1.

This survey summarizes the techniques that have been used for hand localization and gesture classification in the gesture recognition literature, but shows that very little variety has been seen in the real-world applications used to test these techniques, even though they could be used in a variety of real-time applications for human convenience. Applications that take advantage of depth information in challenging environments (such as hand detection and gesture recognition in low lighting, or gesture recognition with occlusions) are still missing, as are applications that test the limitations of depth sensors (such as tolerance to noise in depth images, and detecting hands with a limited range of motion or in close contact with objects); work on both is ongoing.

Gestures are destined to play an increasingly important role in human-computer interaction in the future. Facial gesture recognition methods could be used in vehicles to alert drivers who are about to fall asleep. The area of hand-gesture-based human-computer interaction is very broad, and hand recognition systems can be useful in many fields such as robotics and human-computer interaction. Converting the offline hand gesture recognition system into a real-time system is left as future work. A support vector machine (SVM) could be modified to reduce its complexity; reduced complexity means less computation time, which would allow the system to work in real time.

First and foremost, the authors wish to thank all the members of the Centre of Excellence at Lingaya’s University for their friendship, support and motivation.

Finally, great appreciation goes to our parents for their love and support.

REFERENCES

[1] “Gesture Recognition System,” International Journal of Computer Applications (0975-8887), vol. 1, no. 5, 2010.

[2] IEEE Transactions on Systems, Man, and Cybernetics, Part C: Applications and Reviews, vol. 37, no. 3, May 2007.

[3] J. J. LaViola Jr., “A Survey of Hand Posture and Gesture Recognition Techniques and Technology,” Brown University, NSF Science and Technology Center for Computer Graphics and Scientific Visualization, Box 1910, Providence, RI 02912, USA.

[4] H. Hasan and S. Abdul Kareem, “Static Hand Gesture Recognition Using Neural Network,” Springer Science+Business Media B.V., 2012, DOI 10.1007/s10462-011-9303-1.

[5] K. S. Patwardhan and S. Dutta Roy, “Dynamic Hand Gesture Recognition Using Predictive Eigentracker,” Department of Electrical Engineering, IIT Bombay, Powai, Mumbai 400 076, India.

[6] A. Agarwal and S. S. Rautaray, “Vision Based Hand Gesture Recognition for Computer Interaction: A Survey,” Artif. Intell. Rev., Springer Science+Business Media Dordrecht, 2012, DOI 10.1007/s10462-012-9356-9.

[7] F. Parvini, D. McLeod, C. Shahabi, B. Navai, B. Zali, and S. Ghandeharizadeh, “An Approach to Glove-Based Gesture Recognition,” Computer Science Department, University of Southern California, Los Angeles, California 90089-0781.

[8] F. Dadgostar, A. L. C. Barczak, and A. Sarrafzadeh, “A Color Hand Gesture Database for Evaluating and Improving Algorithms on Hand Gesture and Posture Recognition,” Institute of Information & Mathematical Sciences, Massey University at Albany, Auckland, New Zealand.

[9] J. Kramer and L. Leifer, “The talking glove: a speaking aid for nonvocal deaf and deaf-blind individuals,” Proc. of RESNA 12th Annual Conference, New Orleans, Louisiana, pp. 471-472, 1989.

[10] J. Kramer and L. Leifer, “The talking glove: a speaking aid for nonvocal deaf and deaf-blind individuals,” Proc. of the RESNA 12th Annual Conf., New Orleans, Louisiana, pp. 471-472, 1989.

[11] S. S. Fels and G. E. Hinton, “Glove-Talk: a neural network interface between a data-glove and a speech synthesizer,” IEEE Trans. on Neural Networks, vol. 4, no. 1, pp. 2-8, Jan. 1993.

[12] Virtex Co., Company brochure, Stanford, CA, October 1992.


[13] G. Cybenko, “Approximation by superpositions of a sigmoidal function,” Mathematics of Control, Signals, and Systems, vol. 2, pp. 303-314, 1989.

[14] K. Hornik, M. Stinchcombe, and H. White, “Multilayer feedforward networks are universal approximators,” Neural Networks, vol. 2, pp. 359-366, 1989.

[15] M. C. Su, A Neural Network Approach to Knowledge Acquisition, Ph.D. dissertation, University of Maryland, August 1993.

[16] M. C. Su, “Use of neural networks as medical diagnosis expert systems,” Computers in Biology and Medicine, vol. 24, no. 6, 1994.

[17] P. Vijaya Kumar, N. R. V. Praneeth, and V. Sudheer, “Hand and Finger Gesture Recognition System for Robotic Application,” International Journal of Computer Communication and Information System (IJCCIS), vol. 2, no. 1, ISSN 0976-1349, July-Dec. 2010.

[18] A. Tognetti, F. Lorussi, M. Tesconi, et al., “Wearable kinesthetic systems for capturing and classifying body posture and gesture,” 27th IEEE-EMBS Conf., pp. 1012-1015, 2005.

[19] Y. Wu and T. S. Huang, “Hand modeling, analysis and recognition,” IEEE Signal Processing Magazine, vol. 18, no. 3, pp. 51-60, May 2001.
